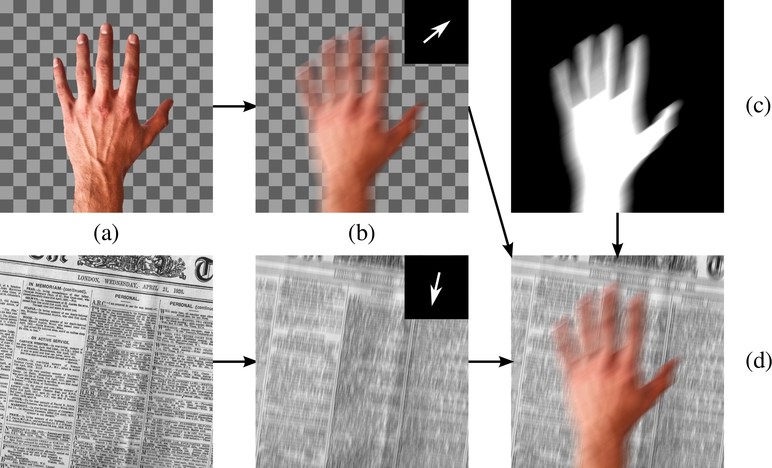

This work is based on a generative model of scenes with motion blur. The sharp layers (a) are blurred (b) using the motion indicated in the top right corners. Together with the blurred layer segmentation (c), an image (d) with complex spatially varying motion blur is generated. The checkerboard pattern indicates transparency.

Motion Blur in Layers

Jonas Wulff,

Michael J. Black

Videos contain complex spatially-varying motion blur due to the combination of object motion, camera motion, and depth variation with finite shutter speeds. Existing methods to estimate optical flow, deblur the images, and segment the scene fail in such cases. In particular, boundaries between differently moving objects cause problems, because here the blurred images are a combination of the blurred appearances of multiple surfaces. We address this with a novel layered model of scenes in motion. From a motion-blurred video sequence, we jointly estimate the layer segmentation and each layer’s appearance and motion. Since the blur is a function of the layer motion and segmentation, it is completely determined by our generative model. Given a video, we formulate the optimization problem as minimizing the pixel error between the blurred frames and images synthesized from the model, and solve it using gradient descent. We demonstrate our approach on synthetic and real sequences.

Data (synthetic and real video sequences and ground truth optical flow for the synthetic sequences)